CRIM’s computer vision team is called upon to solve all kinds of image or video analysis problems related to fields as varied as industrial inspection, dermatology, microscopic imaging, 3D imaging, and so on.

Recently, the team has become interested in the problem of automated animal re-identification, which plays a crucial role in understanding ecosystems. This field is attracting growing attention, not least because of the increasing use of camera traps, which capture large volumes of images of wild animals as they pass in front of the cameras. These volumes are too large for researchers to process manually. Research platforms such as wildme.org, an important tool for citizen science among other things, also badly need image analysis algorithms so that their users can feed databases tracking the evolution of populations of many species.

Context

The Mingan Island Research Station (SRIM) has contacted the CRIM team with a proposal to use its blue whale images to automate the photo-identification process. Whale photo-identification is a demanding activity, requiring time and cutting-edge expertise:

The same whale can be seen in one of the following three photos. Can you tell which one it is and thus pass the photo-identification test? 😁

A)

B)

C)

The photo showing the same whale is photo C; it and the first photo were taken three years apart. The idea is to check whether the blotch patterns in the two photos match [1]:

Naturally, the development of an automatic photo-identification method could greatly assist biologists in the search for photo matches, for example by reducing the list of possible candidates.

Over the last 40 years, SRIM has accumulated thousands of images of these cetaceans, along with their metadata. Can all this information be used by artificial intelligence techniques, more specifically computer vision techniques, to support researchers in their photo-identification activities? And which methods are the most promising?

Automated photo-identification: approach and solutions

Our literature review yielded few results: this problem has received very little attention in the scientific literature. One might think that an algorithm developed to photo-identify another whale species could serve as a starting point. However, the photo-identification process can differ greatly from one species to another: sometimes the tail is distinctive, sometimes the head or the dorsal fin, and so on.

A blue whale is identified by the blotches on its skin, and the most promising algorithmic lead lies in a family of techniques that extract and compare local image features.

Local features are signatures attached to specific points in an image (e.g. points belonging to an object) that have invariance properties, i.e. they keep approximately the same value even when the appearance of the object in the image changes markedly (different viewpoint, different illumination, etc.).
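To make the notion of invariance concrete, here is a deliberately simple sketch, not the descriptor used in this work. It assumes a hypothetical helper, `normalized_patch_descriptor`, that turns a small grayscale patch into a signature by subtracting its mean and dividing by its norm. That makes the signature unchanged under a global brightness and contrast change, one of the illumination effects mentioned above. Real descriptors such as SIFT are far richer and also handle rotation and scale, which this toy does not.

```python
import math


def normalized_patch_descriptor(patch):
    """Flatten a grayscale patch and rescale it to zero mean and unit norm.

    The result does not change when every pixel value v becomes a * v + b
    with a > 0, i.e. under a global brightness/contrast change.
    """
    values = [float(v) for row in patch for v in row]
    mean = sum(values) / len(values)
    centered = [v - mean for v in values]
    norm = math.sqrt(sum(c * c for c in centered))
    if norm == 0.0:
        # A perfectly uniform patch carries no texture to describe.
        return [0.0] * len(values)
    return [c / norm for c in centered]


patch = [[10, 20, 30], [20, 60, 20], [30, 20, 10]]
# The same texture under brighter, higher-contrast lighting: v -> 2 * v + 50.
brighter = [[2 * v + 50 for v in row] for row in patch]
# A genuinely different texture.
other = [[60, 10, 60], [10, 10, 10], [60, 10, 60]]

ref = normalized_patch_descriptor(patch)
print(math.dist(ref, normalized_patch_descriptor(brighter)))  # numerically ~0
print(math.dist(ref, normalized_patch_descriptor(other)))     # clearly larger
```

The signatures of the two lighting conditions coincide, while the different texture stays far away. This separation is what lets signatures be compared across images.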

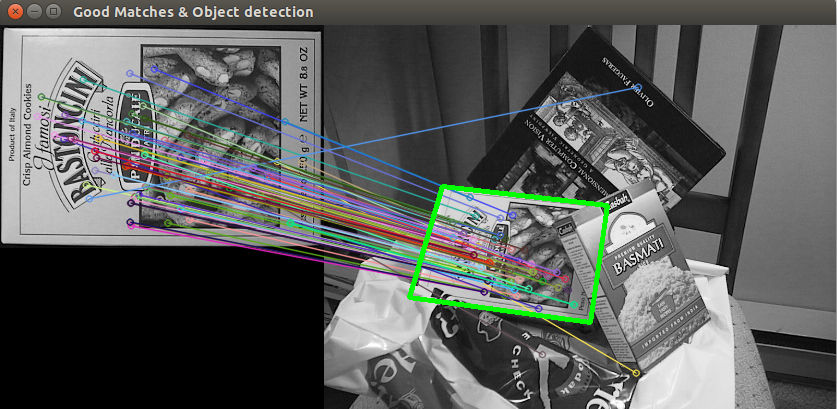

This figure illustrates the idea: locally similar points have been connected by lines because their signatures are similar, even though the object's appearance differs (rotation, tilt, smaller size):

As with the box of cookies above, two images of the same whale should share several points of correspondence, and the more correspondences there are, the more likely it is that the images show the same individual.

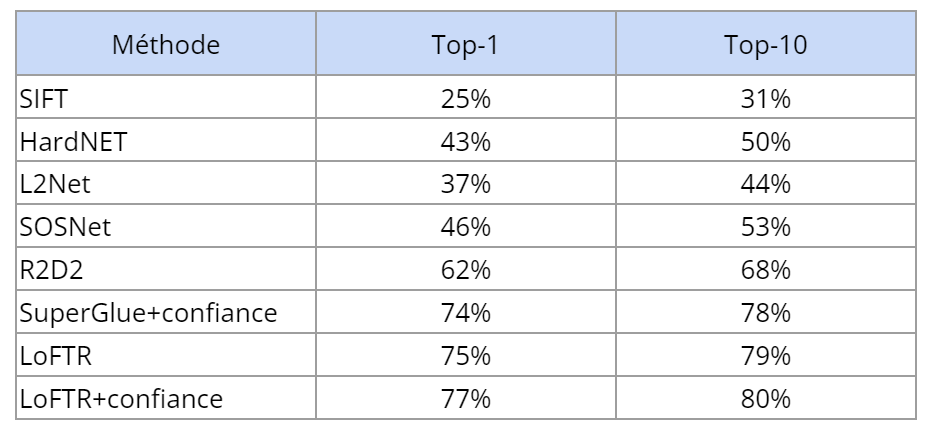

We evaluated several local feature extraction and comparison algorithms on a sizeable dataset (807 different individuals, 3129 images in total). They fall into three categories:

- Classical methods such as SIFT, whose signatures are hand-crafted by vision researchers and compared using Euclidean distance;

- Methods that use neural networks (NN) to generate the signatures of candidate match points;

- End-to-end NN architectures that take a pair of images as input and output the list of matches.
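For the classical category, descriptors are typically matched by brute-force nearest-neighbour search under Euclidean distance. The sketch below adds Lowe's ratio test, a standard companion to SIFT that keeps a match only when the nearest neighbour is clearly closer than the second-nearest. The function name `match_descriptors`, the 0.8 ratio and the toy 2-D descriptors are illustrative assumptions, not the settings used in our evaluation.

```python
import math


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match each descriptor in desc_a to its nearest neighbour in desc_b.

    A match (i, j) is kept only if the nearest neighbour is clearly closer
    than the second-nearest one (Lowe's ratio test), which discards
    ambiguous correspondences, e.g. on repetitive texture.
    """
    matches = []
    if len(desc_b) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, d in enumerate(desc_a):
        # Candidates in desc_b sorted by Euclidean distance to d.
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(desc_b))
        (best, j_best), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j_best))
    return matches


# Toy 2-D "descriptors" extracted from two images of the same object.
image_a = [(0.0, 0.0), (5.0, 5.0), (9.0, 1.0)]
image_b = [(0.1, 0.0), (5.0, 5.2), (5.2, 5.0), (9.0, 1.1)]
print(match_descriptors(image_a, image_b))  # → [(0, 0), (2, 3)]
```

The point at (5, 5) has two equally close candidates in the second image, so the ratio test rejects it rather than guessing.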

The results are shown in the following table. For each query image, the algorithm ranks the candidate whales from most to least likely. The top-10 criterion is the proportion of cases in which the correct individual appears among the ten highest-ranked candidates; the top-1 criterion is the proportion of cases in which the correct individual is ranked first.
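The top-k criterion can be computed in a few lines. In this sketch, `top_k_accuracy` is a hypothetical helper: each query contributes a ranked list of candidate IDs plus the ID of the individual it actually shows, and the whale IDs are made up for illustration.

```python
def top_k_accuracy(rankings, true_ids, k):
    """Fraction of queries whose true individual is among the first k candidates.

    rankings[q] lists candidate individual IDs for query q, sorted from most
    to least likely; true_ids[q] is the individual actually shown in query q.
    """
    if not true_ids or len(rankings) != len(true_ids):
        raise ValueError("need one non-empty ranking per query")
    hits = sum(1 for ranked, truth in zip(rankings, true_ids) if truth in ranked[:k])
    return hits / len(true_ids)


# Three hypothetical queries ranked against a small gallery of whale IDs.
rankings = [
    ["B042", "B017", "B113"],
    ["B017", "B042", "B113"],
    ["B113", "B008", "B042"],
]
truth = ["B042", "B042", "B099"]
print(top_k_accuracy(rankings, truth, k=1))  # only the first query is a hit
print(top_k_accuracy(rankings, truth, k=2))  # the second query now counts too
```

Top-k can only grow with k, which is why top-10 figures are always at least as high as top-1 figures.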

Two points are worth highlighting:

- Performance varies widely across the methods tested, which probably indicates that the problem is difficult to solve;

- The best results are achieved by methods offering an end-to-end approach (LoFTR, SuperGlue). The other approaches break the problem down into three separate stages: detecting points of interest in each image, computing their signatures with neural networks, and searching for similar signatures between the two images.
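The three-stage decomposition can be illustrated with a deliberately tiny sketch on a toy grayscale grid. All function names are hypothetical, and the neural-network signature stage is replaced by a hand-crafted one purely for illustration. Detection keeps strict local maxima, each signature is the mean-subtracted 3x3 patch around a point, and matching keeps mutual nearest neighbours. The second image is the first one shifted by one column and brightened, so the matched points should correspond.

```python
import math


def detect_keypoints(img, threshold):
    """Stage 1: interior pixels that are strict maxima of their 3x3 window."""
    points = []
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            window = [img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                      if (dy, dx) != (0, 0)]
            if img[y][x] > threshold and all(img[y][x] > v for v in window):
                points.append((y, x))
    return points


def describe(img, point):
    """Stage 2: the 3x3 patch around the point minus its mean (offset-invariant)."""
    y, x = point
    patch = [img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    mean = sum(patch) / len(patch)
    return [v - mean for v in patch]


def mutual_nearest_neighbours(desc_a, desc_b):
    """Stage 3: keep (i, j) only if j is i's nearest neighbour and vice versa."""
    if not desc_a or not desc_b:
        return []
    nn_ab = [min(range(len(desc_b)), key=lambda j: math.dist(d, desc_b[j])) for d in desc_a]
    nn_ba = [min(range(len(desc_a)), key=lambda i: math.dist(e, desc_a[i])) for e in desc_b]
    return [(i, j) for i, j in enumerate(nn_ab) if nn_ba[j] == i]


def match_images(img_a, img_b, threshold=0):
    """Chain the three stages and return pairs of matched pixel coordinates."""
    pts_a, pts_b = detect_keypoints(img_a, threshold), detect_keypoints(img_b, threshold)
    desc_a = [describe(img_a, p) for p in pts_a]
    desc_b = [describe(img_b, p) for p in pts_b]
    return [(pts_a[i], pts_b[j]) for i, j in mutual_nearest_neighbours(desc_a, desc_b)]


a = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 9, 3, 0, 0, 0, 0],
    [0, 2, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 5, 1, 0],
    [0, 0, 0, 0, 1, 4, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
# Same scene shifted right by one column and brightened by +20.
b = [[20] + [v + 20 for v in row[:-1]] for row in a]
print(match_images(a, b))  # → [((1, 1), (1, 2)), ((3, 4), (3, 5))]
```

Because each stage is a separate function, any one of them can be swapped for a learned component. The end-to-end methods instead learn detection, description and matching jointly.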

Note that end-to-end methods also provide a confidence level for each match, indicating its quality: using this information gives slightly better performance than simply counting matches.
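The post does not specify how per-match confidences are aggregated. The sketch below assumes one plausible choice, summing the confidences of the matches found with each candidate, and contrasts it with plain match counting. `rank_candidates` and the confidence values are hypothetical.

```python
def rank_candidates(match_confidences, use_confidence=True):
    """Rank gallery individuals for one query image.

    match_confidences maps an individual ID to the confidence values (in [0, 1])
    of the matches found between the query and that individual's image.
    The score is either the sum of confidences or the plain match count.
    """
    def score(item):
        _, confs = item
        return sum(confs) if use_confidence else len(confs)

    ranked = sorted(match_confidences.items(), key=score, reverse=True)
    return [individual for individual, _ in ranked]


# Hypothetical matcher output: many weak matches vs. fewer confident ones.
matches = {
    "B017": [0.2, 0.3, 0.25, 0.2, 0.3],  # 5 matches, total confidence 1.25
    "B042": [0.9, 0.95, 0.85],           # 3 matches, total confidence 2.70
}
print(rank_candidates(matches, use_confidence=False))  # → ['B017', 'B042']
print(rank_candidates(matches, use_confidence=True))   # → ['B042', 'B017']
```

Weighting by confidence stops a candidate from winning on many low-quality matches alone.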

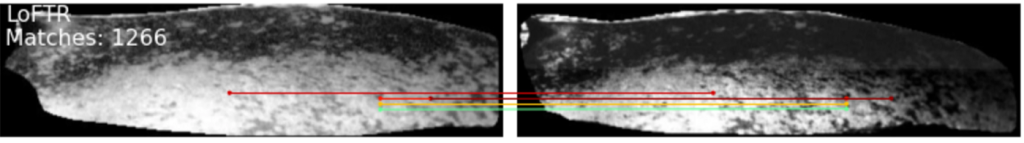

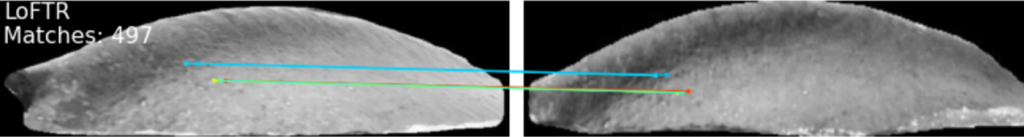

LoFTR [2], in particular, is impressive in its ability to find matches. A close look at its output clearly shows that the points connected by the colored lines correspond to similar skin areas, indicating that the pair of images comes from the same whale:

Another example:

For further details, interested readers can consult a publication [3] presented last August at the “Computer Vision for Analysis of Underwater Imagery (CVAUI) 2022” conference. The master class held on September 23 explains in greater detail the approach we took to solve this problem.

Conclusion

Thanks to SRIM’s rich database, CRIM experts have been able to develop image matching algorithms for blue whales that are promising despite the difficulty of the task. Beyond whale photo-identification, many other image comparison and object detection problems can benefit from the powerful computer vision tools mentioned in this post.

References

[1] R. Sears et al. “Photographic identification of the blue whale (Balaenoptera musculus) in the Gulf of St. Lawrence, Canada”.

[2] J. Sun et al. “LoFTR: Detector-Free Local Feature Matching with Transformers”.

[3] M. Lalonde et al. “Automated blue whale photo-identification using local feature matching”.