Powered by the latest advances in artificial intelligence and machine learning, deepfakes offer automated procedures for creating fake content that is increasingly hard for human observers to detect¹.

The technique was developed in the research community in 2016 and gained public attention when fake X-rated videos featuring public figures appeared on Reddit. Any digital content disseminated online can be deepfaked. As you might guess, deepfakes are often made with malicious intent, to harm a public figure. But the danger of deepfakes goes beyond X-rated videos.

The video shown below, featuring two toddlers who are clearly friends, went viral on social media, spreading a positive message of tolerance and friendship. However, during the last US presidential campaign, it was doctored to show one toddler running away from the other. The new video, titled "Terrified toddler runs from racist baby", was shared by President Trump, sending a message of fear and intolerance.

Original video on CNN showing two toddlers who are clearly friends.*

Tampered video showing one toddler running away from the other.*

In 2019, there were 15,000² deepfake videos in circulation online, an 83% increase in less than a year! This trend is still going strong now that deepfake software has gone mainstream.

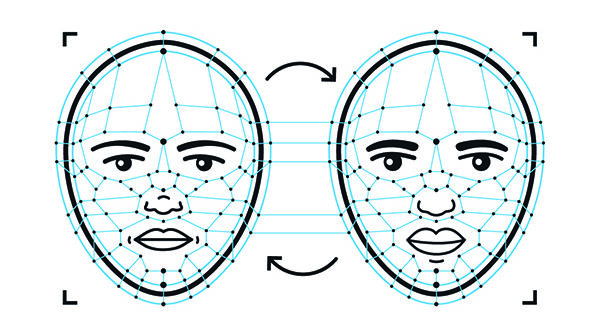

Deepfaking can involve adding, editing or removing objects in an original video or image. It works especially well with human faces, since they all share the same features (eyes, nose, mouth, etc.). The algorithm "learns" the original face, and from there one of two things can happen: the face from a source photo is swapped onto a face appearing in a target photo, often that of a public figure; or the face of a public figure is manipulated so that the algorithm makes it say things that are out of character. As this technology grows in sophistication, experts fear that it may become increasingly difficult to tell real images from fake ones.

These counterfeit images and videos may be harmful to society and individuals. They can be used for defamation and extortion.

– Mohamed Dahmane, researcher in computer vision at the Computer Research Institute of Montréal (CRIM)

In 2017, Canada's Department of National Defence (DND) launched the Innovation for Defence Excellence and Security (IDEaS) program, a $1.6 billion investment over 20 years. To foster collaboration between innovators and provide opportunities to work with the government, this program offers technological challenges for research organisations and businesses.

CRIM experts chose the "Verification of full motion video integrity" challenge. The desired outcome is a suite of AI-based tools and methods for detecting tampered videos in circulation and for distinguishing fake images from authentic ones.

The Fake Detector Prototype

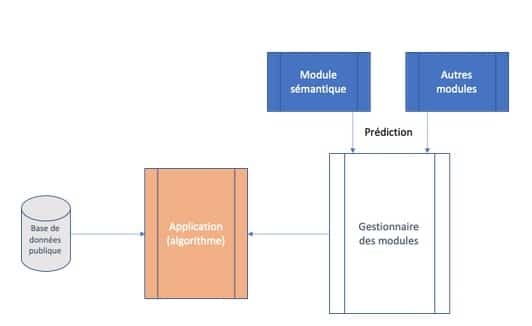

The prototype and its plugins were built using a novel dataset of 10,765 generated tampered images**. The semantic splicing plugin, developed by researcher Mohamed Dahmane, classifies every pixel of an image to detect which ones have been tampered with.

Figure 1. CRIM Detection Flow Chart

Here, the picture shows a large aircraft into which a fake plane was inserted. The goal was to determine whether the semantic splicing plugin could detect the pixels of the foreign object and deduce that the image was forged.
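To make the idea of pixel classification a little more concrete, here is a minimal Python sketch of how per-pixel scores could be turned into a tampering mask and then into an image-level verdict. It assumes the model outputs a tampering probability for each pixel; the functions `tamper_mask` and `is_forged`, the 0.5 threshold and the `min_pixels` rule are hypothetical illustrations, not CRIM's actual plugin.

```python
from typing import List

Mask = List[List[bool]]


def tamper_mask(prob_map: List[List[float]], threshold: float = 0.5) -> Mask:
    """Label each pixel as tampered (True) when its probability reaches the threshold.

    The probability map and the 0.5 default are hypothetical assumptions.
    """
    return [[p >= threshold for p in row] for row in prob_map]


def is_forged(mask: Mask, min_pixels: int = 1) -> bool:
    """Flag the whole image as forged if at least `min_pixels` pixels are tampered.

    `min_pixels` is a hypothetical rule for filtering out isolated false alarms.
    """
    return sum(sum(row) for row in mask) >= min_pixels


# Toy 3x3 probability map: three pixels score above 0.5.
probs = [
    [0.02, 0.10, 0.05],
    [0.08, 0.91, 0.87],
    [0.03, 0.76, 0.12],
]
mask = tamper_mask(probs)
print(is_forged(mask, min_pixels=2))  # True
```

Requiring a minimum number of flagged pixels is one simple way to trade sensitivity for fewer false alarms; a real detector would likely use a more elaborate decision rule.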

Figure 2. The original image, to which a fake plane was added

As shown below, the semantic splicing plugin detected the pixel contour of the small plane with a high Intersection over Union (IoU)*** of 77%, meaning that the overlap between the region it flagged and the region manually flagged by experts amounts to 77% of the two regions combined.

Figure 3. The CRIM semantic plugin detects the pixels of the fake plane.
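The IoU metric itself is simple to compute. The following minimal Python sketch compares a predicted tampering mask with an expert-annotated one; the `iou` helper is a hypothetical illustration, not part of the CRIM tool, and the toy masks are made up so that the overlap is 77 pixels out of a union of 100. Note that the prediction still recovers about 86% (77 of 90) of the expert-flagged pixels, which shows why IoU, which also penalizes falsely flagged pixels, is a stricter measure.

```python
from typing import List

Mask = List[List[bool]]


def iou(predicted: Mask, ground_truth: Mask) -> float:
    """Intersection over Union of two same-shaped binary masks."""
    if len(predicted) != len(ground_truth):
        raise ValueError("masks must have the same shape")
    intersection = union = 0
    for pred_row, gt_row in zip(predicted, ground_truth):
        if len(pred_row) != len(gt_row):
            raise ValueError("masks must have the same shape")
        for p, g in zip(pred_row, gt_row):
            if p and g:
                intersection += 1  # flagged by both the model and the experts
            if p or g:
                union += 1  # flagged by at least one of them
    # Assumed convention: two empty masks agree perfectly.
    return intersection / union if union else 1.0


# Toy 1x100 masks: experts flag pixels 0-89, the model flags pixels 13-99.
gt = [[i < 90 for i in range(100)]]
pred = [[i >= 13 for i in range(100)]]
print(iou(pred, gt))  # 0.77 (77 overlapping pixels / 100 pixels in the union)
```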

In short, the CRIM deep learning solution proved significantly helpful in detecting rigged or tampered pixels in images.

Nevertheless, the fight against deepfakes is far from over. Every new detection solution is met with greater sophistication from deepfake software makers. "It's a game of cat and mouse," concludes Mohamed Dahmane.

Time and effort must be invested continuously to improve the detection model. With the support of the Natural Sciences and Engineering Research Council of Canada (NSERC), CRIM will pursue research on deepfake detection, not only in the context of fake news but also in the judicial sphere. In fact, Mohamed Dahmane hopes that one day, algorithms developed by CRIM could be used to certify the authenticity of digital content used in a court of law. Stay tuned!
